This is the kind of setup you need on Google scale, but the point is the simplicity. Pushes the data into the global instance -> Simply collects and stores the data on global scale Pushes the data into the regional Prometheus -> 1. To save the words, consider this monitoring setup: Datacener Prometheus Regional Prometheus Global Prometheus 1. The second option (adding these labels with Prometheus as a part of scrape configuration) is what I would choose. Well more specifically it calculates a sum for every instance and then just drops those with a wrong version, but you got the idea. It calculates a sum of node_arp_entries for instances with node-exporter version="0.17.0". Could someone please suggest how I should store this region and env information? Are there any other better ways? So, not sure if this is a good way to do it.

Storing region and env info as labels with each of the metrics (not recommended: ).I found a few ways to do it, but not sure how it's done in general, as it seems a pretty common scenario: How do I store this region information which could be any one of the 13 regions and env information which could be dev or test or prod along with metrics so that I can slice and dice metrics based on region and env? Let's say I've defined a metric named: http_responses_total then I would like to know its value aggregated over all the regions along with individual regional values.

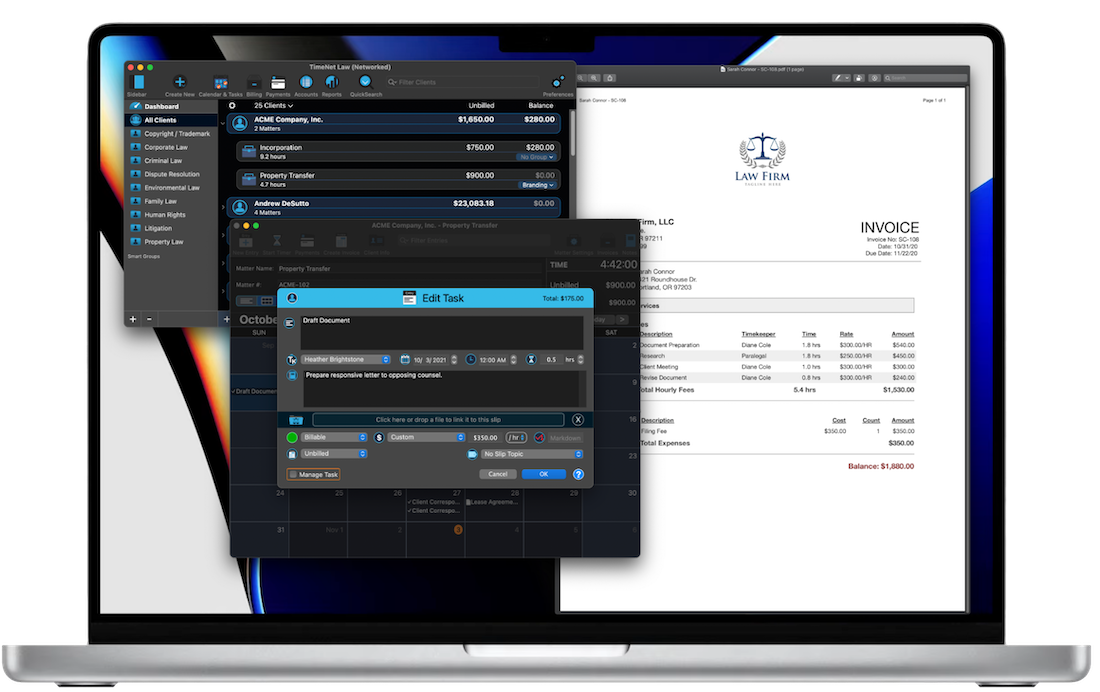

TIMENET DEEP RECURRENT DOWNLOAD SERIES

There is a central server which will scrape the /metrics endpoints across all the regions and will store them in a central Time Series Database.

TIMENET DEEP RECURRENT DOWNLOAD CODE

I'm trying to instrument the application code with Prometheus metrics client, and will be exposing the collected metrics to the /metrics endpoint. I've an application, and I'm running one instance of this application per AWS region.
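The second option (region and env attached by the scraping Prometheus through its scrape configuration, not by the application) can be illustrated with a small plain-Python simulation. This is a sketch under stated assumptions, not Prometheus itself: the target list, instance names, and sample values are hypothetical, and sum_by is a hand-rolled stand-in for PromQL's sum by (...), not a library function. It covers both things the question asks for: the total of http_responses_total across all regions and the per-region breakdown.

```python
from collections import defaultdict

# Hypothetical scrape targets: one app instance per AWS region (plus a dev copy).
# region/env live in the scraper's configuration, not in the application code,
# similar to static labels attached to targets in a Prometheus scrape config.
SCRAPE_TARGETS = [
    {"instance": "app-us-east-1:8000", "labels": {"region": "us-east-1", "env": "prod"}},
    {"instance": "app-eu-west-1:8000", "labels": {"region": "eu-west-1", "env": "prod"}},
    {"instance": "app-eu-west-1-dev:8000", "labels": {"region": "eu-west-1", "env": "dev"}},
]

# Made-up samples each instance exposes on /metrics; note: no region/env labels.
EXPOSED = {
    "app-us-east-1:8000": [("http_responses_total", {"code": "200"}, 120.0),
                           ("http_responses_total", {"code": "500"}, 3.0)],
    "app-eu-west-1:8000": [("http_responses_total", {"code": "200"}, 80.0)],
    "app-eu-west-1-dev:8000": [("http_responses_total", {"code": "200"}, 5.0)],
}


def scrape_all():
    """Scrape every target and attach its instance and target labels to each sample."""
    series = []
    for target in SCRAPE_TARGETS:
        for name, labels, value in EXPOSED[target["instance"]]:
            # Target labels simply win on conflict in this sketch; Prometheus by
            # default renames a clashing scraped label to exported_<name> instead.
            merged = {**labels, "instance": target["instance"], **target["labels"]}
            series.append((name, merged, value))
    return series


def sum_by(series, metric, by=(), **matchers):
    """Rough stand-in for PromQL `sum by (<by>) (metric{<matchers>})`.

    Raw counter values are summed for simplicity; real queries usually
    wrap the counter in rate() first.
    """
    out = defaultdict(float)
    for name, labels, value in series:
        if name != metric:
            continue
        if any(labels.get(k) != v for k, v in matchers.items()):
            continue
        out[tuple(labels.get(k, "") for k in by)] += value
    return dict(out)


if __name__ == "__main__":
    series = scrape_all()
    print(sum_by(series, "http_responses_total"))                   # all regions
    print(sum_by(series, "http_responses_total", by=("region",)))   # per region
    print(sum_by(series, "http_responses_total", by=("region",), env="prod"))
```

With these made-up numbers the global total is 208, and the per-region totals are 123 (us-east-1) and 85 (eu-west-1), or 80 for eu-west-1 when filtering to env="prod". The design point is that the application code never mentions its region: moving an instance or adding a region only changes the scraper's target list. In real PromQL the two views would look like sum(rate(http_responses_total[5m])) and sum by (region) (rate(http_responses_total[5m])).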

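The node_arp_entries example describes a two-step evaluation: compute a sum for every instance, then drop the instances whose build info reports the wrong version. A plain-Python sketch of those two steps follows, assuming the query had a shape like sum by (instance) (node_arp_entries) and on (instance) node_exporter_build_info{version="0.17.0"}. That exact query is an assumption, not quoted from the answer, and the instance names and values below are made up.

```python
from collections import defaultdict

# Made-up node_arp_entries samples, one per (instance, device).
NODE_ARP_ENTRIES = [
    ({"instance": "host-a:9100", "device": "eth0"}, 4.0),
    ({"instance": "host-a:9100", "device": "eth1"}, 2.0),
    ({"instance": "host-b:9100", "device": "eth0"}, 7.0),
    ({"instance": "host-c:9100", "device": "eth0"}, 1.0),
]

# Made-up info metric: one series per instance, value always 1, metadata in labels.
BUILD_INFO = [
    ({"instance": "host-a:9100", "version": "0.17.0"}, 1.0),
    ({"instance": "host-b:9100", "version": "0.16.0"}, 1.0),
    ({"instance": "host-c:9100", "version": "0.17.0"}, 1.0),
]


def sum_by_instance(samples):
    """Step 1: sum for every instance, like `sum by (instance) (...)`."""
    totals = defaultdict(float)
    for labels, value in samples:
        totals[labels["instance"]] += value
    return dict(totals)


def and_on_instance(left, info, version):
    """Step 2: keep only instances whose info series has the wanted version,
    like `... and on (instance) node_exporter_build_info{version="..."}`."""
    keep = {labels["instance"] for labels, _ in info if labels.get("version") == version}
    return {inst: total for inst, total in left.items() if inst in keep}


if __name__ == "__main__":
    per_instance = sum_by_instance(NODE_ARP_ENTRIES)
    print(per_instance)
    print(and_on_instance(per_instance, BUILD_INFO, "0.17.0"))
```

host-b is summed in step 1 (7.0) and then dropped in step 2 because its version is 0.16.0, which is exactly the "sum for every instance, then drop the wrong version" behaviour described. The same join pattern would work with a hypothetical info metric carrying region and env labels, but it makes every query more complex than simply having those labels on the series.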